Leveraged Mining

Designing a compliance-first mining management portal

TL;DR

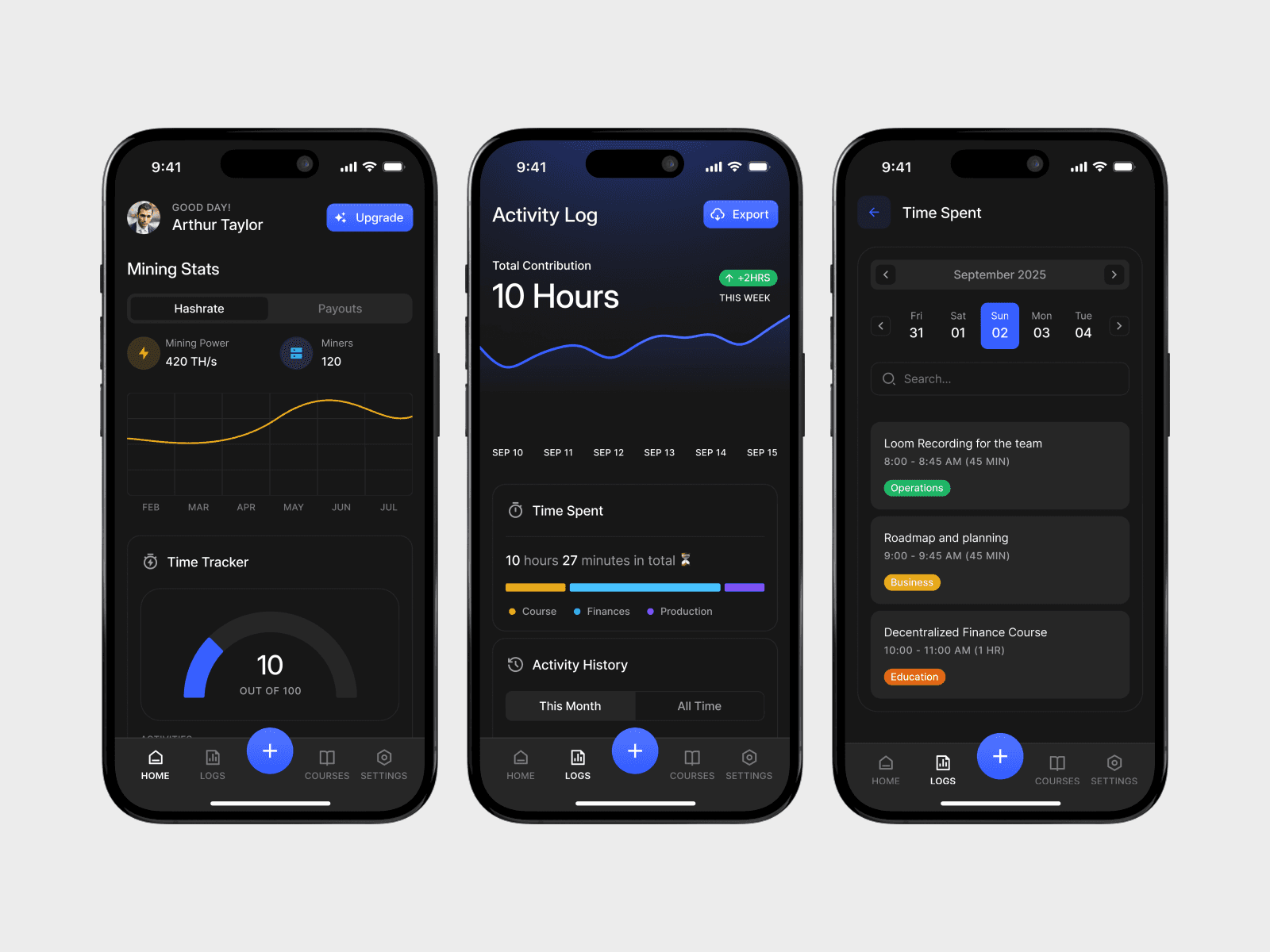

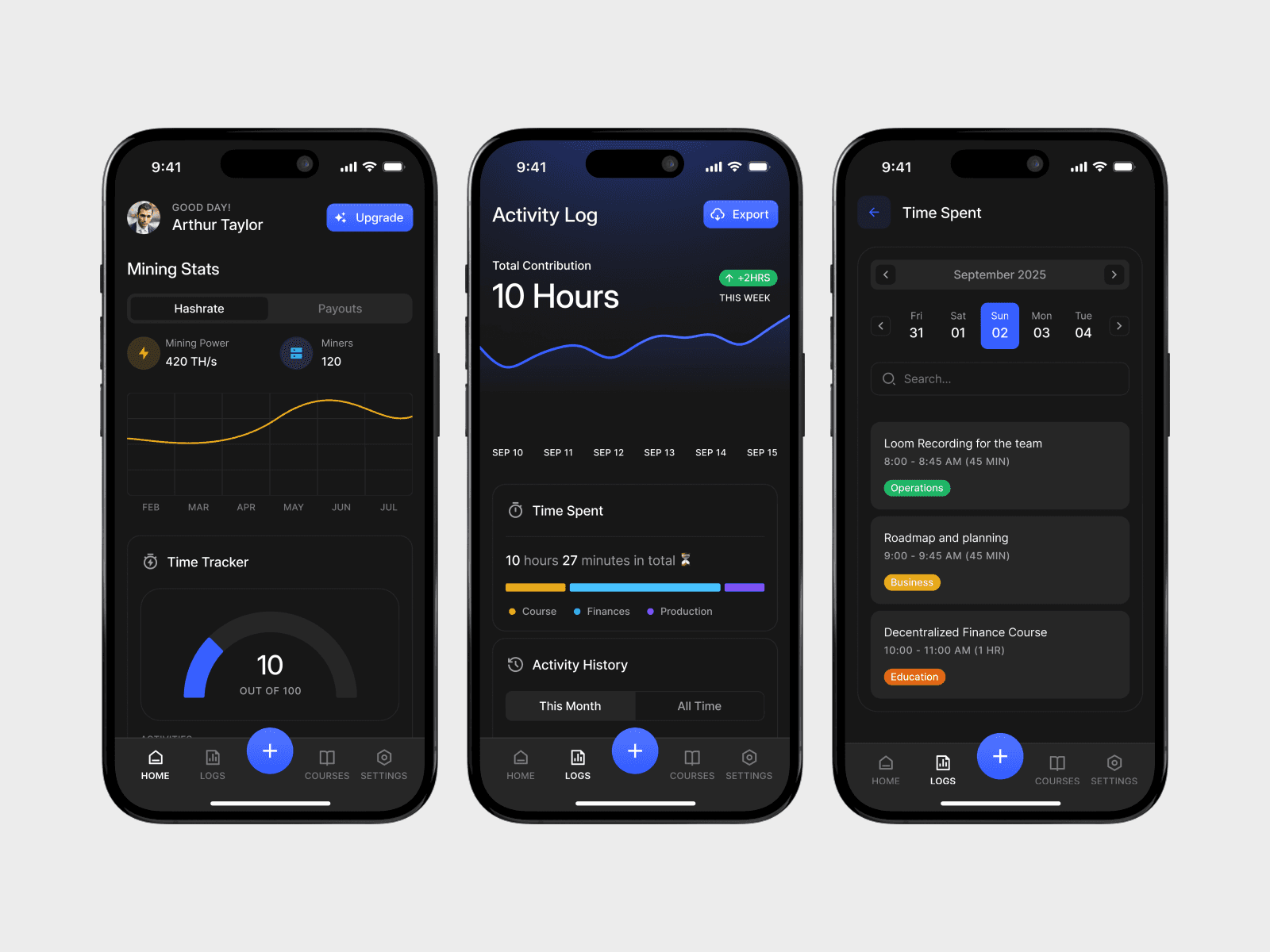

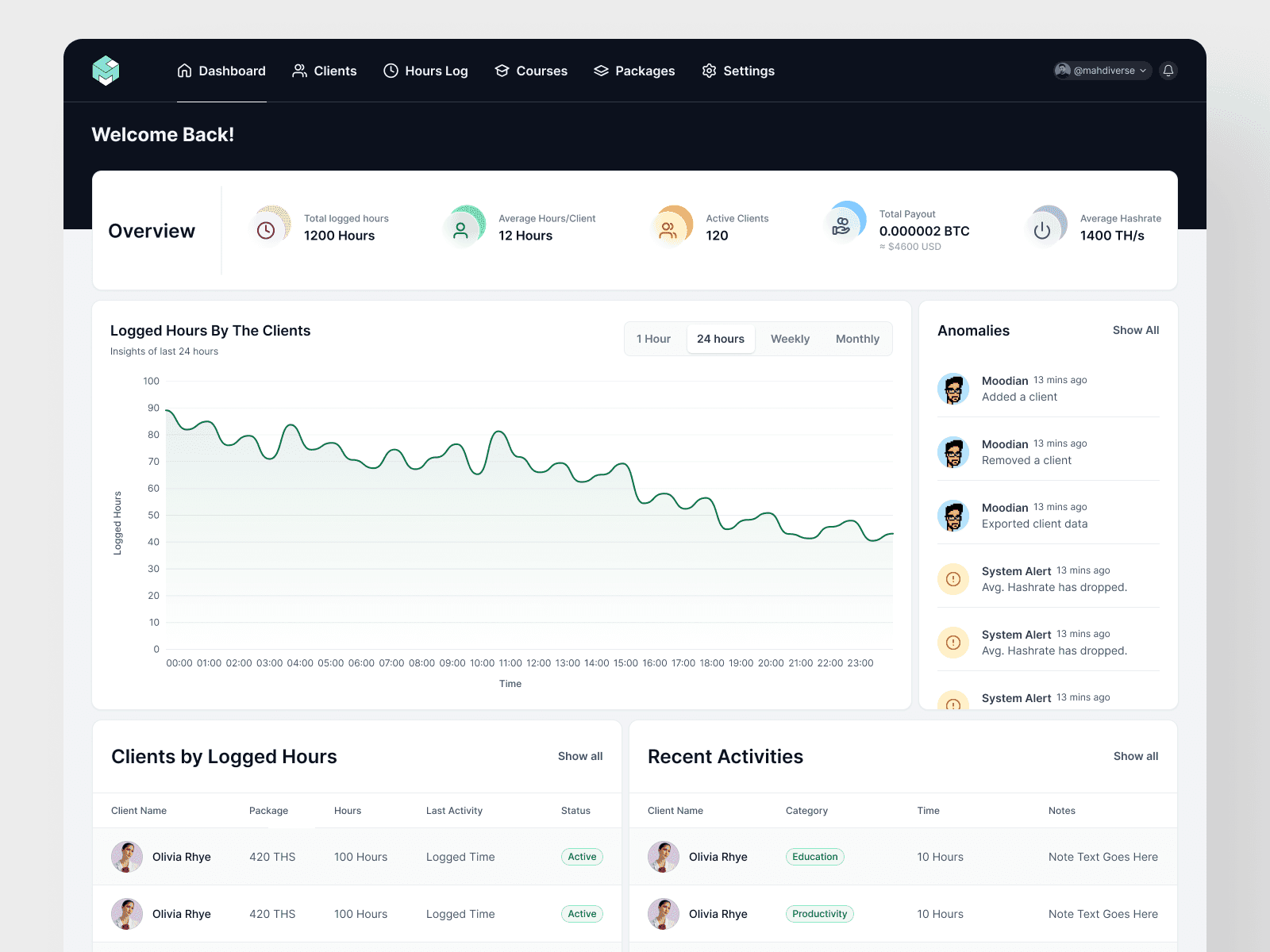

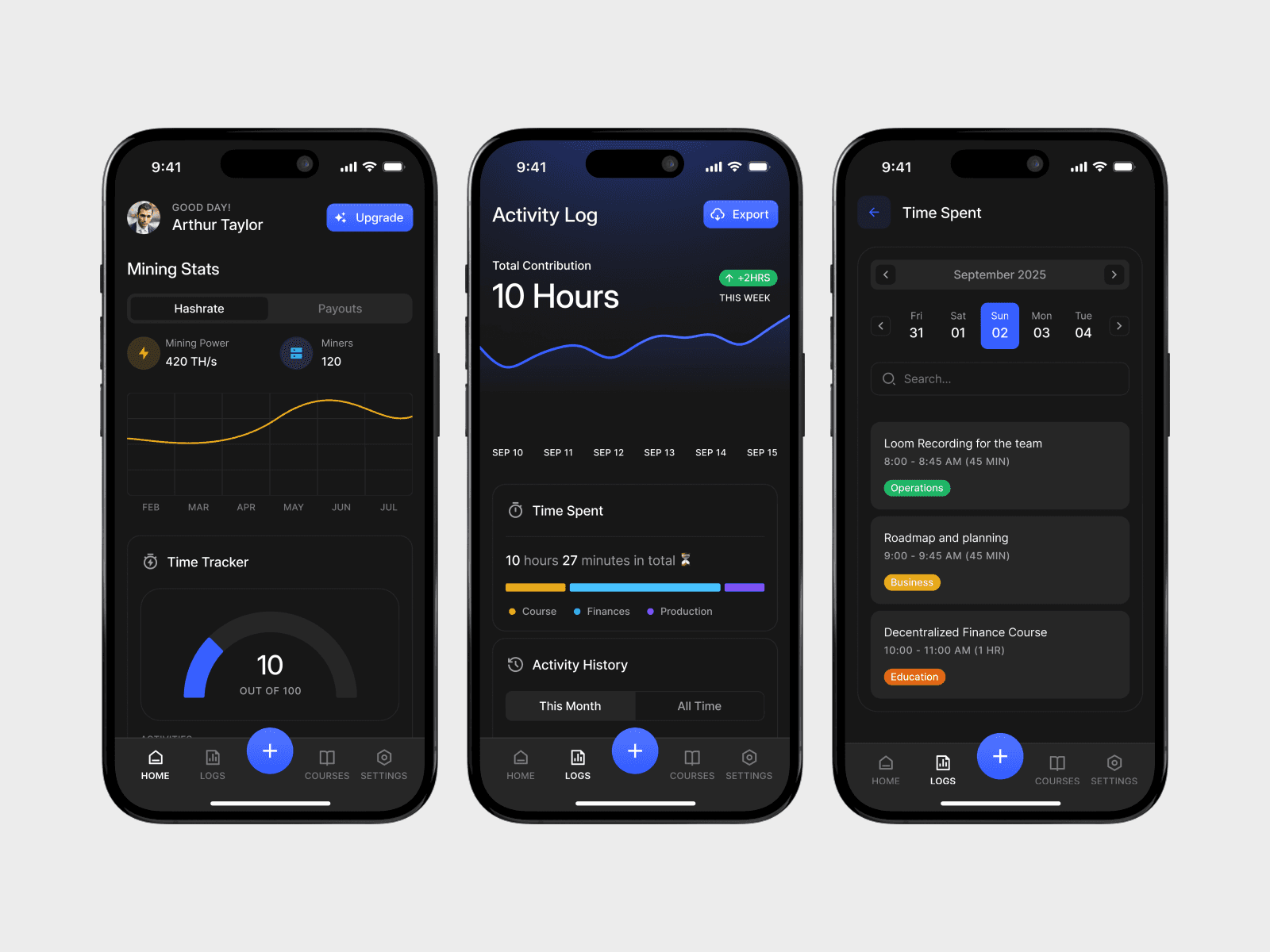

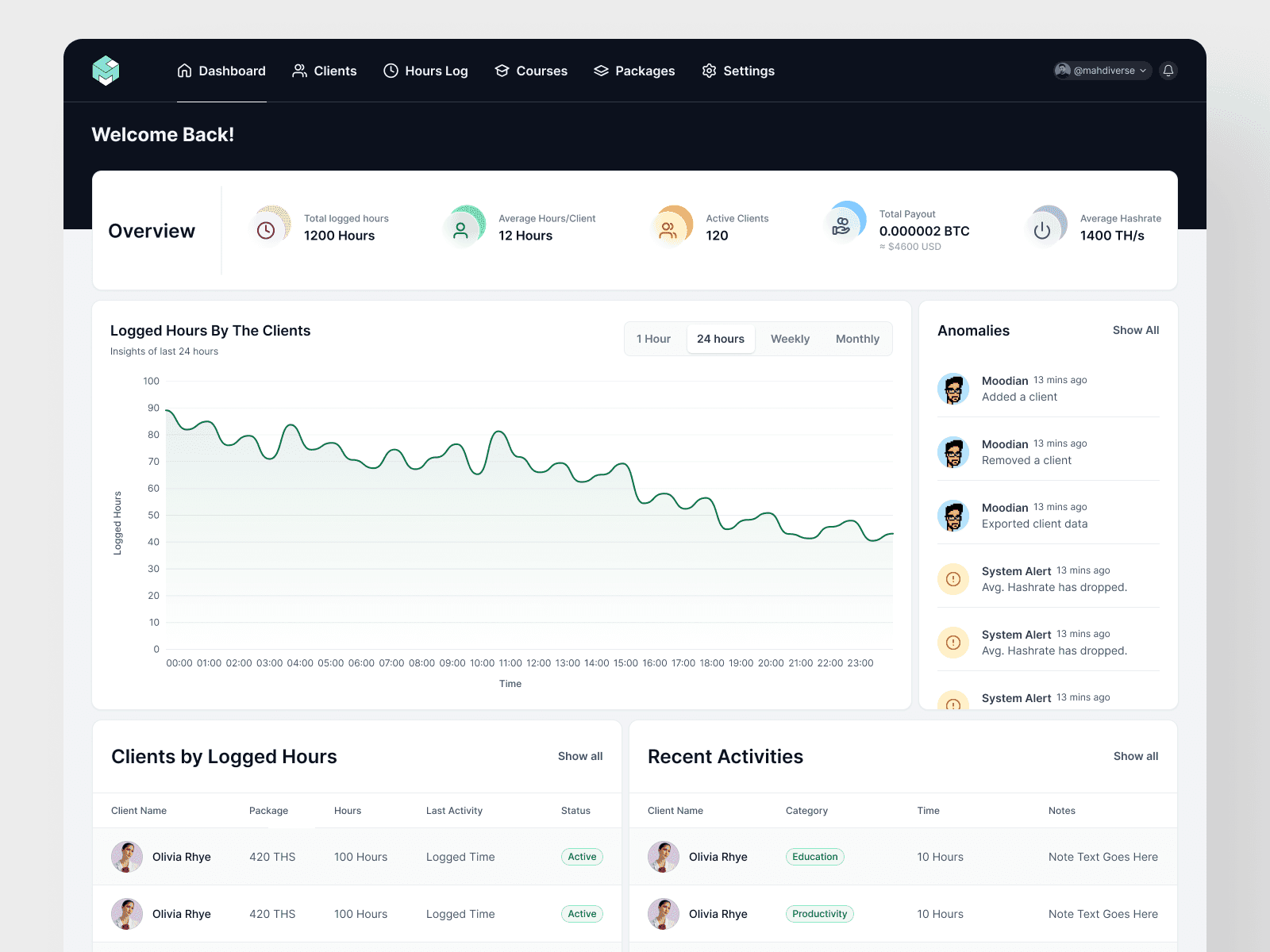

Leveraged Mining is a web portal that helps Bitcoin mining clients document 100+ hours of business participation per year while also giving them a simple way to monitor mining performance, learn through curated content, and reach support. I designed the client product and admin system end to end, prioritizing credibility and consistent behavior over automation.

My Role

Role: Product Designer (End to End)

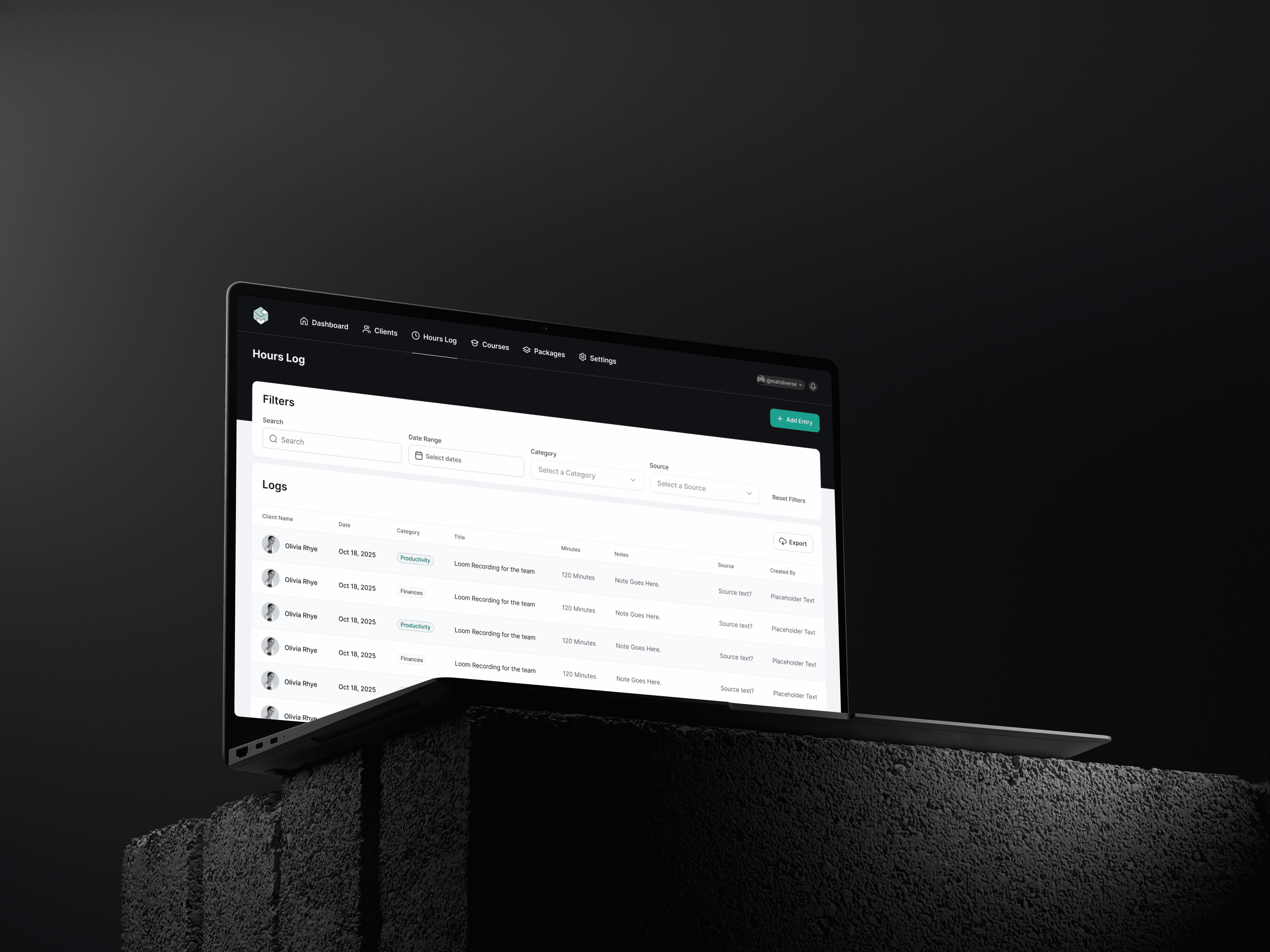

Platform: Web (desktop + mobile responsive)

Phase: MVP and V1

Scope: Client portal and internal admin dashboard

Core work: UX research, information architecture, interaction design, system patterns, admin tooling, iteration based on early usage signals

The Problem

Bitcoin mining clients in the U.S. face a documentation requirement: to qualify for common tax treatment, they often need to prove material participation, including maintaining a credible record of work performed over the year (commonly framed as 100+ hours).

The issue was not that clients were inactive. Most clients already:

check dashboards and payouts

review uptime and incidents

make decisions about expansion

learn about mining operations and strategy

communicate with hosting and support

The issue was that this activity was not documented consistently. Logs were scattered across spreadsheets, calendars, email threads, and memory. When tax season arrived, many clients tried to reconstruct months of work retroactively, producing records that were hard to defend.

Core problem: a credibility gap created by inconsistent documentation.

Research and What We Learned

Research was lightweight and embedded in real workflows rather than formal studies. I focused on understanding where documentation breaks down.

Research inputs

onboarding and support conversations with clients

review of recurring compliance-related questions and tickets

observation of how clients described their work and decisions

Key findings

Participation happens in short sessions (often 15 to 45 minutes).

Logging is delayed until it feels urgent, then becomes inaccurate.

Many users are unsure what qualifies as participation, so they under-log.

Automation reduces trust in this context. Users do not want a system that appears to manufacture hours.

These findings shaped both what we built and what we intentionally did not build.

Goals and Success Metrics

Product goals

Make participation logging easy enough to do consistently all year

Reduce ambiguity around what to log

Produce records that look credible and defensible over time

Keep the product simple enough to support as an MVP

Research-informed success metrics

Instead of focusing on vanity usage metrics, we defined metrics that reflect real documentation behavior:

Behavior metrics

Weekly logging frequency per client

Time to log a single activity

Ratio of same-day logs to backfilled entries

Category usage rate (categories vs unstructured entries)
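To make two of these behavior metrics concrete, here is a minimal sketch of how they could be computed from logged entries. It is an illustration only, not the production implementation: the `LogEntry` fields, the category names, and the sample data are all assumptions. The same-day metric is expressed here as the share of all entries recorded on the day of the activity, which carries the same information as a same-day to backfilled ratio.

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import List, Optional


@dataclass
class LogEntry:
    # Hypothetical shape of a logbook entry (field names are assumptions).
    activity_date: date        # the day the work was done
    created_at: datetime       # when the entry was actually recorded
    category: Optional[str]    # None means an unstructured entry
    minutes: int


def same_day_share(entries: List[LogEntry]) -> float:
    """Share of entries recorded on the same calendar day as the activity."""
    if not entries:
        return 0.0
    same_day = sum(1 for e in entries if e.created_at.date() == e.activity_date)
    return same_day / len(entries)


def category_usage_rate(entries: List[LogEntry]) -> float:
    """Share of entries that were logged with a category."""
    if not entries:
        return 0.0
    return sum(1 for e in entries if e.category) / len(entries)


# Made-up sample data: three same-day entries and one backfilled, uncategorized entry.
entries = [
    LogEntry(date(2024, 3, 4), datetime(2024, 3, 4, 18, 30), "Performance review", 30),
    LogEntry(date(2024, 3, 5), datetime(2024, 3, 5, 9, 0), "Support", 15),
    LogEntry(date(2024, 2, 10), datetime(2024, 3, 6, 8, 0), None, 45),
    LogEntry(date(2024, 3, 7), datetime(2024, 3, 7, 20, 15), "Expansion planning", 40),
]

print(same_day_share(entries))
print(category_usage_rate(entries))
```

Both functions return a value between 0 and 1, so they can be tracked per client over time without exposing raw entry counts.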

Outcome metrics

Percentage of clients reaching 100 hours before year-end

Reduction in compliance-related support questions

Constraints That Shaped the Design

This product evolved across two phases. The MVP focused on validating behavior change and credibility. V1 focused on improving clarity, robustness, and internal visibility based on early usage patterns.

Core constraints across both phases included:

Web-based delivery

Manual logging by design

Audit credibility prioritized over convenience

Logging needed to stay under one minute

Additional V1 constraints:

Preserve existing user behavior while improving structure

Avoid introducing features that weaken trust

Improve admin visibility without adding client-side friction

Users and Use Cases

Primary users: mining clients

risk-aware, time-conscious

not full-time operators, but actively engaged

need a reliable record without administrative overhead

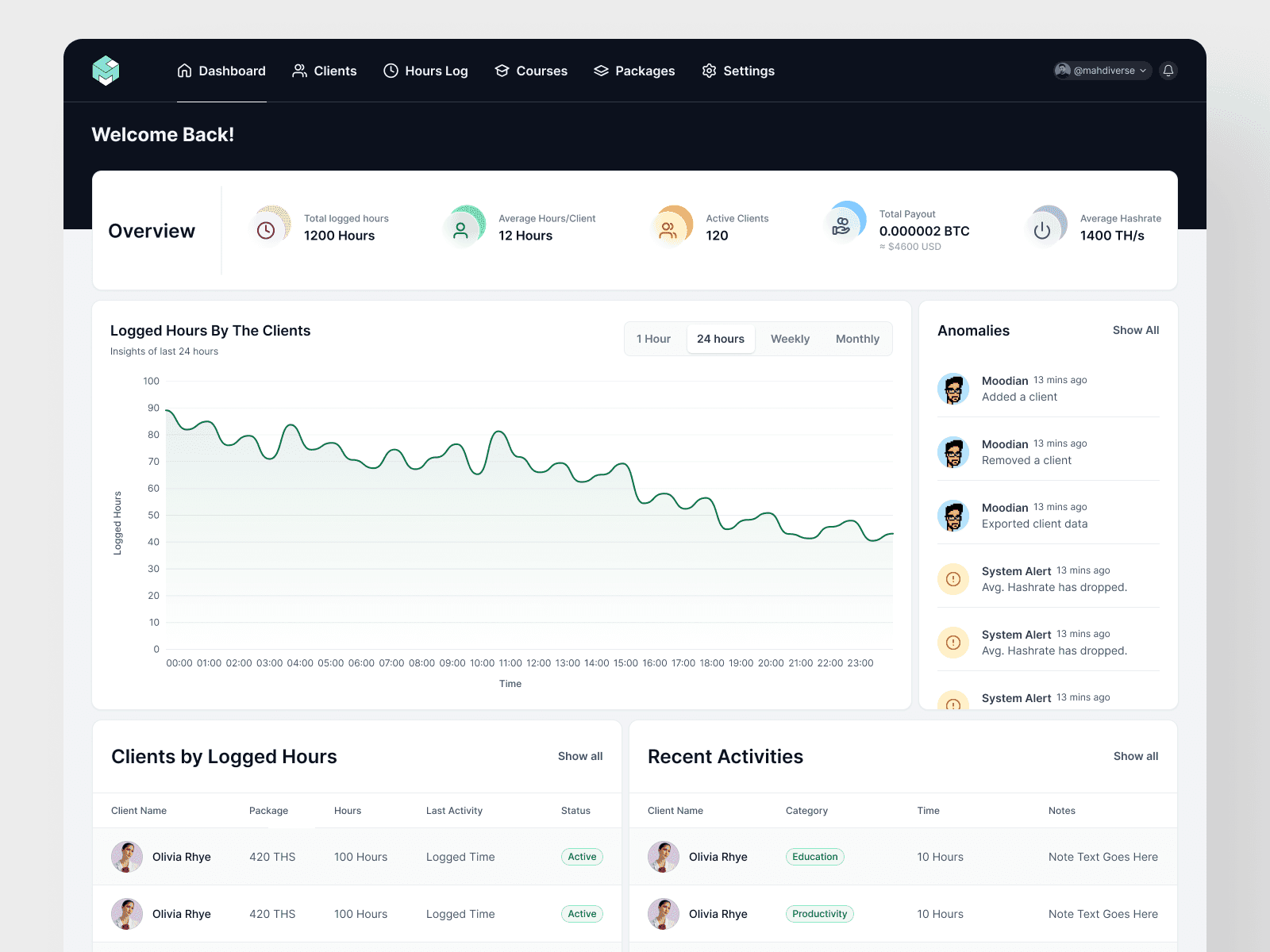

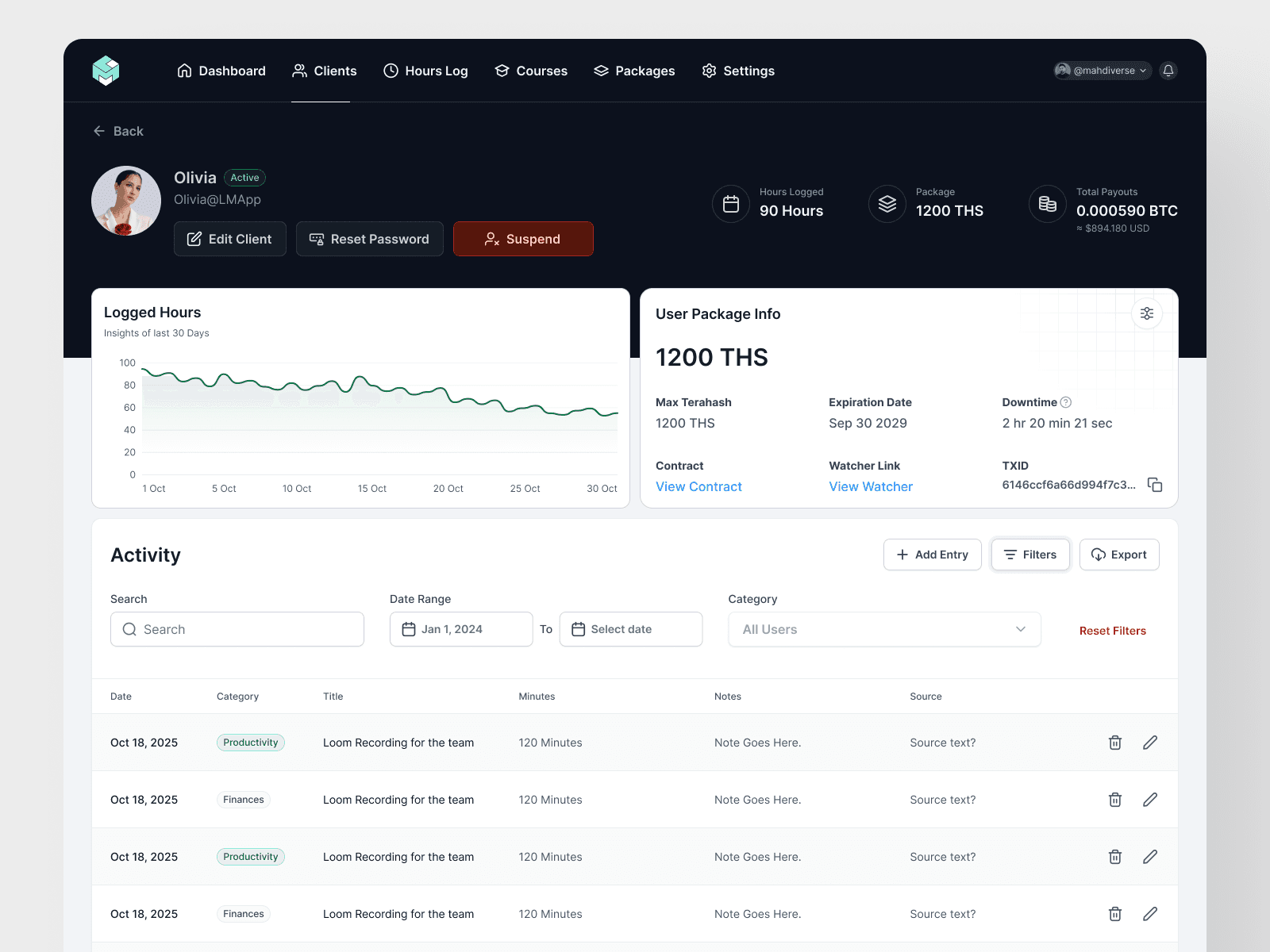

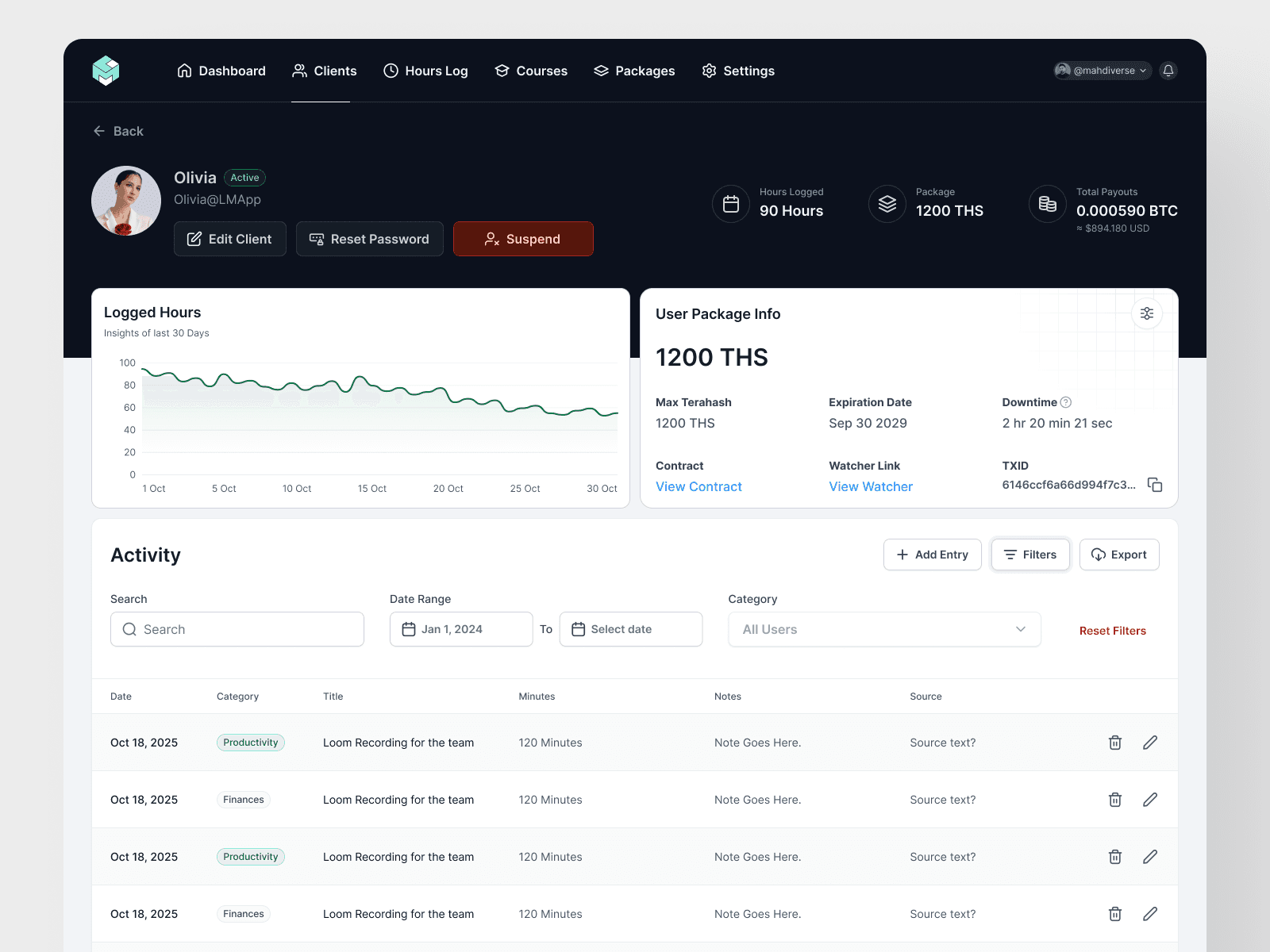

Secondary users: internal admins

monitor participation patterns across clients

support audit readiness

manage content, categories, and client accounts

identify suspicious or risky logging behavior

Core use cases (client)

Log operational oversight and business work

Monitor performance and payouts

Learn through curated mining content

Reach support when issues arise

Track progress toward annual participation goals

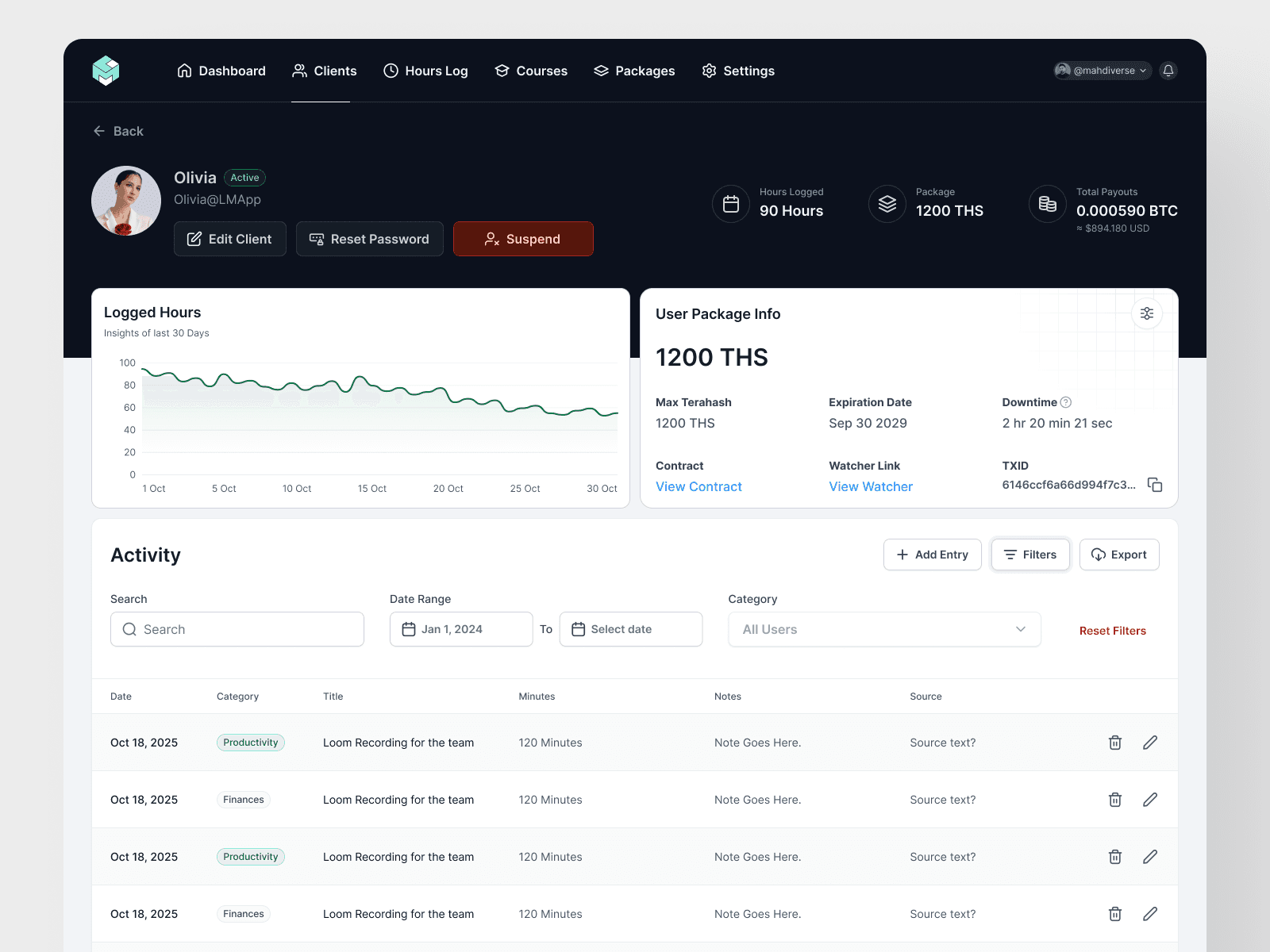

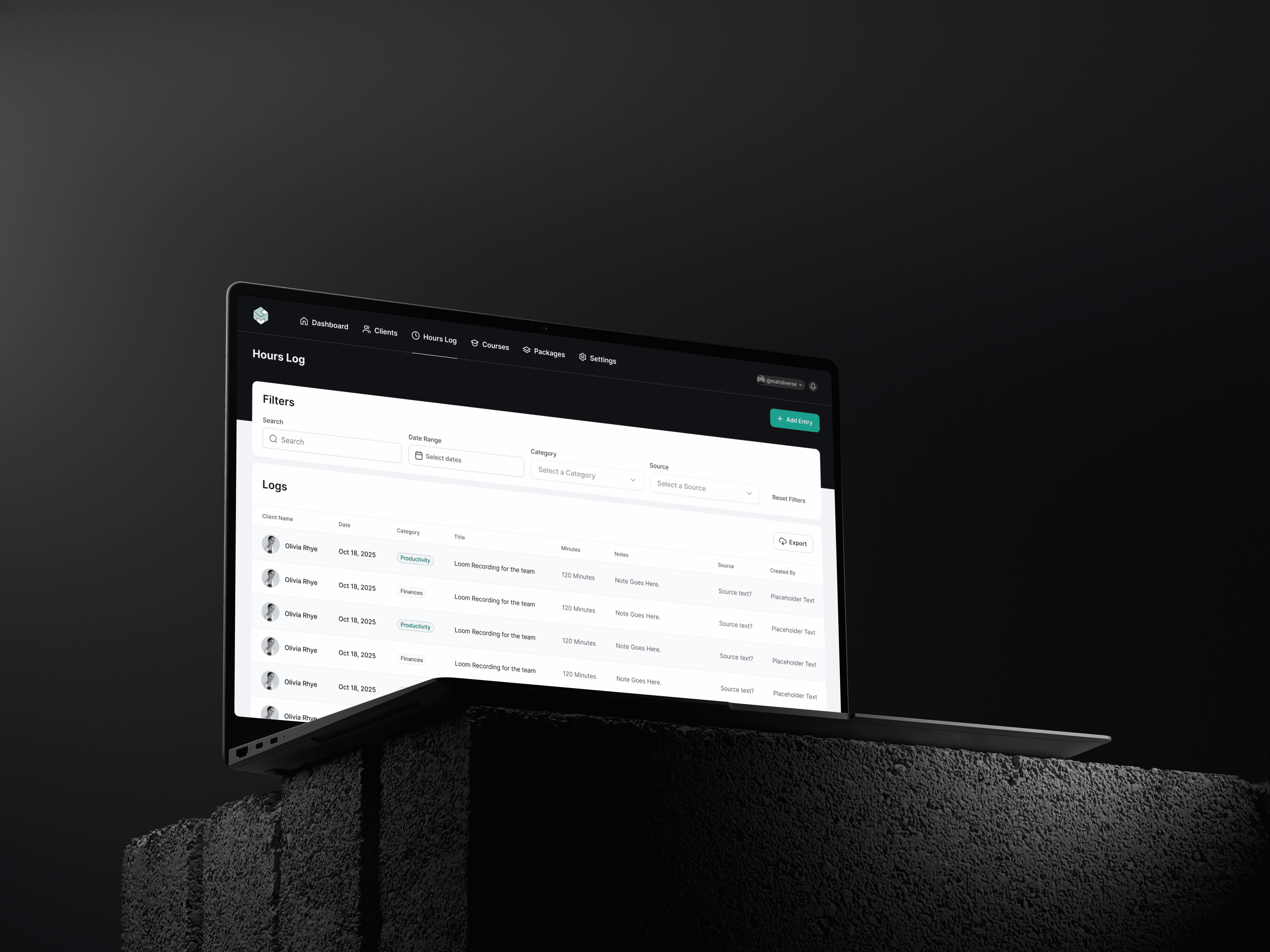

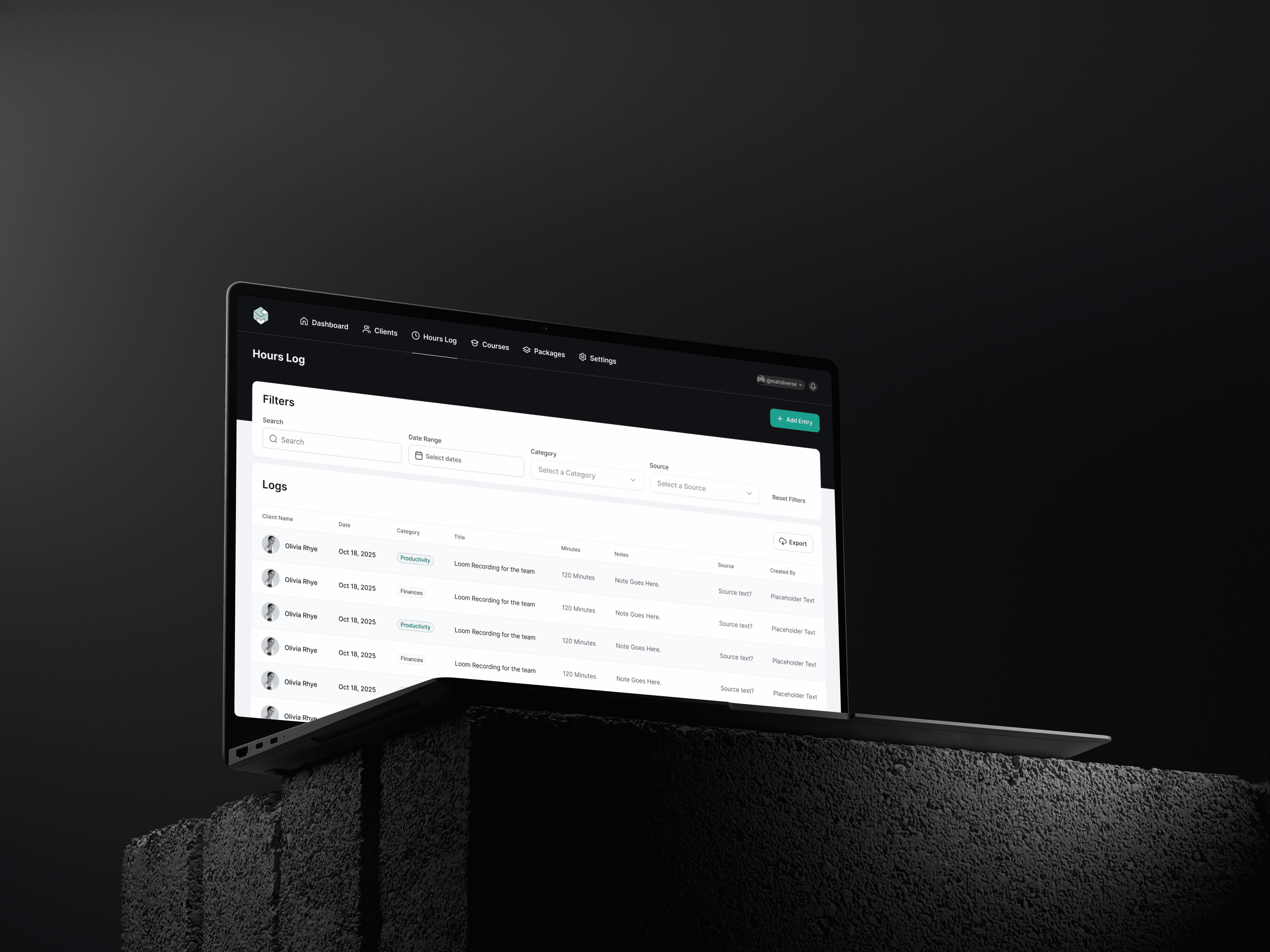

Core use cases (admin)

Review activity patterns per client

Flag anomalies and investigate entries

Export records when requested

Maintain audit trails of edits and changes

Product Strategy Across MVP and V1

The product strategy unfolded in two deliberate stages.

MVP focus

The MVP was designed to answer one question:

Will users log participation consistently if ambiguity and friction are removed?

The MVP prioritized:

A simple participation logbook

Clear activity categories

Visible progress toward the annual goal

Read-only mining performance visibility

Basic support and expansion entry points

Automation, bulk backdating, and advanced reporting were intentionally excluded to protect credibility.

V1 focus

V1 built on validated MVP behavior and focused on:

Improving clarity and consistency of logged data

Strengthening admin oversight and audit readiness

Reducing edge-case ambiguity without restricting users

V1 additions emphasized system quality rather than feature expansion.

Information Architecture

The structure was built around a simple mental model clients already have:

What have I done?

How close am I to the goal?

What should I do next?

Logging and progress were central. Supporting features were always accessible but never dominant.

On the admin side, the structure mirrored the client model so internal reviews aligned with what clients actually see and do. This reduced confusion and helped maintain credibility during reviews.

Key UX Flows

1) Logging an activity (primary flow)

The most important flow was designed around speed and clarity.

Design choices

default date set to today to reduce input

categories to reduce ambiguity and improve consistency

free-text description for narrative detail

optional notes for evidence and context

immediate feedback and recent entries visible after logging

UX target: under one minute per entry.
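The design choices above map fairly directly onto a small data model. The sketch below is one way to express them, assuming a hypothetical `ActivityEntry` type and an invented category list; the real product's fields and categories may differ.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical category list, for illustration only.
CATEGORIES = (
    "Monitoring",
    "Incident review",
    "Expansion planning",
    "Learning",
    "Support",
)


@dataclass
class ActivityEntry:
    category: str                  # picked from a fixed list to reduce ambiguity
    description: str               # free-text narrative detail
    minutes: int                   # time spent on the activity
    activity_date: date = field(default_factory=date.today)  # defaults to today
    notes: str = ""                # optional evidence and context

    def __post_init__(self) -> None:
        # Validate at creation time so feedback can be shown immediately.
        if self.category not in CATEGORIES:
            raise ValueError(f"Unknown category: {self.category!r}")
        if not self.description.strip():
            raise ValueError("Description is required")
        if self.minutes <= 0:
            raise ValueError("Minutes must be positive")


# Only three inputs are needed for the common case.
entry = ActivityEntry("Monitoring", "Reviewed weekly payout report", 20)
print(entry.activity_date, entry.category, entry.minutes)
```

Keeping the required inputs to three fields is what makes the under-one-minute target realistic; the date and notes only need attention when they differ from the default.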

2) Progress visibility (reinforcement)

Progress toward the annual goal is always visible. The UI is intentionally neutral:

no gamification

no pressure cues

no reward language

The goal is to encourage steady behavior without encouraging inflated entries.
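As a small illustration of what a neutral progress readout can look like, the sketch below turns logged minutes into a plain status line with no badges, streaks, or celebratory copy. The function name, wording, and the choice to cap the percentage at 100 are assumptions for this example.

```python
ANNUAL_GOAL_HOURS = 100  # the annual participation goal described above


def progress_summary(total_minutes: int, goal_hours: int = ANNUAL_GOAL_HOURS) -> str:
    """Return a plain, factual progress line for the current year."""
    hours = total_minutes / 60
    # Cap at 100 so passing the goal does not read as a score to beat.
    percent = min(100, round(hours / goal_hours * 100))
    return f"{hours:.1f} of {goal_hours} hours logged ({percent}%)"


print(progress_summary(3900))
```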

3) Mining dashboard (supporting legitimacy)

Mining stats are presented as read-only, focused on oversight. This makes routine monitoring easier and keeps complexity out of the MVP.

Tradeoffs and Integrity Safeguards

This product required balancing usability with accountability.

Editing and deleting entries

Users can correct mistakes, but changes are tracked internally. This supports honest usage while keeping records explainable.
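One common way to track changes internally is an append-only revision history, where every create, edit, and delete adds a snapshot instead of overwriting the past. The sketch below shows that pattern under assumed names (`TrackedEntry`, `Revision`); it is not a description of the actual backend.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class Revision:
    # Immutable snapshot of the entry after a change.
    changed_at: datetime
    action: str          # "create", "edit", or "delete"
    description: str
    minutes: int


@dataclass
class TrackedEntry:
    description: str
    minutes: int
    deleted: bool = False
    history: List[Revision] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._record("create")

    def _record(self, action: str) -> None:
        # History is only ever appended to, never rewritten.
        self.history.append(
            Revision(datetime.now(timezone.utc), action, self.description, self.minutes)
        )

    def edit(self, description: Optional[str] = None, minutes: Optional[int] = None) -> None:
        if description is not None:
            self.description = description
        if minutes is not None:
            self.minutes = minutes
        self._record("edit")

    def delete(self) -> None:
        # Soft delete: the entry is hidden from the client but stays explainable.
        self.deleted = True
        self._record("delete")


e = TrackedEntry("Reviewed uptime report", 30)
e.edit(minutes=25)
e.delete()
print([r.action for r in e.history])
```

With this shape, an admin reviewing an entry can see what it originally said, what it was changed to, and when, which is what keeps a corrected record explainable.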

Anomalies are flagged, not blocked

Instead of hard limits, extreme entries are flagged for review. This avoids punishing legitimate edge cases while still surfacing risk patterns.
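The flag-instead-of-block approach can be sketched as a check that returns review flags and never rejects the entry. The thresholds and flag names below are invented for illustration; the case study does not specify the real rules.

```python
from datetime import date
from typing import List

# Hypothetical thresholds, chosen only for this example.
MAX_ENTRY_MINUTES = 8 * 60   # a single entry longer than 8 hours
MAX_BACKFILL_DAYS = 30       # logged more than 30 days after the activity


def review_flags(minutes: int, activity_date: date, logged_on: date) -> List[str]:
    """Return flags for admin review. The entry is saved either way."""
    flags = []
    if minutes > MAX_ENTRY_MINUTES:
        flags.append("long_entry")
    if (logged_on - activity_date).days > MAX_BACKFILL_DAYS:
        flags.append("late_backfill")
    return flags


# A routine entry produces no flags.
print(review_flags(30, date(2024, 3, 4), date(2024, 3, 4)))
# An unusually long, heavily backfilled entry is surfaced for review, not rejected.
print(review_flags(600, date(2024, 1, 1), date(2024, 3, 4)))
```

Because the function only returns flags, a legitimate edge case such as a full-day site visit still gets recorded, and the judgment call stays with a human reviewer.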

Manual logging is a feature here

Manual input reinforces intent and produces more credible records than passive automation, aligning with the product’s core job.

Admin Tooling

Admin tooling became a stronger focus in V1 after validating the core client behavior during MVP. As logging volume increased, internal visibility and explainability became critical to maintaining trust.

V1 improvements emphasized:

Better global visibility across clients

Clearer anomaly detection patterns

Stronger audit trails around edits and changes

More reliable export workflows

The admin system evolved alongside the client product, ensuring internal teams could confidently support audits and compliance questions.

Outcomes and Learnings

Impact signals we used

Across MVP and V1, success was evaluated through behavior signals rather than feature usage. MVP validated that consistent logging was possible when ambiguity was removed. V1 focused on reinforcing that behavior at scale and improving internal confidence in the data.

What V1 validated

Logging behavior remained consistent without adding automation

Clear categories improved data quality over time

Admin visibility reduced reactive compliance support

Trust was preserved by avoiding convenience shortcuts

What this project reinforced

Consistency beats intensity. Small actions recorded regularly hold up better than occasional bursts of logging.

Convenience can erode trust in regulated contexts.

Restraint is a design skill, especially in MVPs.

Admin tooling is critical for long-term product integrity.

If I Took This to V2

Better exports (PDF/CSV) built for accountant workflows

Optional evidence attachments (with clear guidelines)

More proactive nudges that encourage consistency without encouraging inflated entries

Deeper admin analytics to spot disengagement early

V2 would build on V1 stability rather than expanding scope prematurely.

This project reinforced that strong product design is often about restraint rather than addition. By focusing on reducing ambiguity instead of automating behavior, Leveraged Mining helped turn work clients were already doing into records they could trust. Moving from MVP to V1 validated that credibility, consistency, and internal visibility matter more than feature breadth in regulated contexts. The result is a system designed not just to be used, but to hold up over time.
